Table of Contents

- Big picture: ways to classify AI

- Capability‑based types

- Technique / function‑based types

- 1. Artificial Narrow Intelligence (ANI)

- 2. Artificial General Intelligence (AGI)

- 3. Artificial Superintelligence (ASI)

- 4. Machine Learning & Deep Learning

- 5. Generative AI

- Other important AI categories

- Quick comparison table

- Final Conclusion

Types of AI span from today’s narrow, task‑specific systems to speculative superintelligent agents that could outperform humans across every domain. Understanding each type, including Generative AI, traditional Machine Learning, AGI, and ASI, helps you evaluate capabilities, risks, and use cases more clearly.

Big picture: ways to classify AI

AI is commonly grouped along two axes: by capability level (Narrow, General, Superintelligence) and by technique (machine learning, deep learning, generative models, etc.). This section gives you a map you can reuse in different articles or diagrams.

Capability‑based types

● Artificial Narrow Intelligence (ANI) – specialized systems for a limited task or domain, like recommendation engines or image classifiers.

● Artificial General Intelligence (AGI) – hypothetical human‑level systems that can learn and reason across many domains.

● Artificial Superintelligence (ASI) – speculative systems that would surpass human intelligence in virtually all cognitive tasks.

Technique / function‑based types

● Symbolic AI (Good Old‑Fashioned AI) – rule‑based expert systems and logic engines.

● Machine Learning (ML) – systems that learn patterns from data instead of explicit rules.

● Deep Learning (DL) – ML using multi‑layer neural networks for complex patterns like vision and language.

● Generative AI – models that can synthesize new text, images, audio, video, or code.
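
To make the contrast between the Symbolic AI and Machine Learning entries concrete, here is a minimal Python sketch. The spam task, the keyword list, the exclamation‑mark feature, and every function name are illustrative assumptions, not taken from any real system.

```python
# Toy contrast: a hand-written rule (symbolic) vs. a threshold learned from data (ML).
# All data and names here are hypothetical.

def rule_based_is_spam(message: str) -> bool:
    """Symbolic style: a human writes the rule explicitly."""
    banned = {"lottery", "winner", "prize"}
    return any(word in banned for word in message.lower().split())

def learn_threshold(examples: list[tuple[int, bool]]) -> int:
    """ML style: pick the exclamation-mark count that best separates the labels."""
    best_threshold, best_correct = 0, -1
    for threshold in range(0, 11):
        correct = sum((count >= threshold) == label for count, label in examples)
        if correct > best_correct:
            best_threshold, best_correct = threshold, correct
    return best_threshold

# (number of "!" in the message, is_spam) pairs standing in for training data.
training = [(0, False), (1, False), (2, False), (4, True), (5, True), (7, True)]
threshold = learn_threshold(training)

def learned_is_spam(message: str) -> bool:
    """Apply the threshold that was derived from the examples, not written by hand."""
    return message.count("!") >= threshold
```

In the first function the knowledge lives in the code; in the second it lives in a number fitted to examples, so changing the training data changes the behavior without editing the rule.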

1. Artificial Narrow Intelligence (ANI)

What it is

ANI (also called Weak AI) is designed to perform a specific task extremely well, without general understanding outside its domain. Virtually all production AI systems today are ANI, even if they look very smart.

Key characteristics

● Task‑specific: optimized for a narrow, well‑defined problem (e.g., fraud detection, speech recognition).

● High accuracy in scope, poor transfer: excels within its training domain but cannot easily apply knowledge elsewhere.

● Data‑dependent: performance hinges on volume and quality of labeled or interaction data.

How it works (simplified)

● Data collection – gather task‑specific data (transactions for fraud, images for vision, text for classification).

● Model training – use ML or deep learning algorithms to learn a mapping from inputs to outputs.

● Evaluation & deployment – validate performance and deploy as APIs, embedded models, or on‑device systems.
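
The three steps above can be sketched end to end in a few lines of Python. This is only an illustration: the transactions are made up, and the nearest‑centroid classifier is an assumed stand‑in for whatever ML or deep learning model a real fraud system would use.

```python
# Toy end-to-end ANI pipeline: collect data, train, evaluate, expose a predict function.
# The transactions and the nearest-centroid model are illustrative assumptions.

# 1. Data collection: (amount in dollars, hour of day) -> label (1 = fraud, 0 = legitimate).
train_data = [((20.0, 14), 0), ((35.0, 10), 0), ((15.0, 18), 0),
              ((900.0, 3), 1), ((1200.0, 2), 1), ((750.0, 4), 1)]
test_data = [((25.0, 12), 0), ((1000.0, 1), 1), ((40.0, 16), 0), ((820.0, 3), 1)]

def train(examples):
    """2. Model training: compute the mean feature vector (centroid) of each class."""
    sums, counts = {}, {}
    for features, label in examples:
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}

def predict(model, features):
    """Assign the class whose centroid is closest in squared Euclidean distance."""
    def distance(centroid):
        return sum((f - c) ** 2 for f, c in zip(features, centroid))
    return min(model, key=lambda label: distance(model[label]))

model = train(train_data)

# 3. Evaluation: measure accuracy on held-out examples before deployment.
correct = sum(predict(model, features) == label for features, label in test_data)
accuracy = correct / len(test_data)
```

Deployment would then wrap `predict` behind an API or embed it on a device. Note that the sketch skips feature scaling, so the dollar amount dominates the distance; a real pipeline would normalize features first.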

Why it matters

ANI is where the economic value of AI is being realized now, powering automation, personalization, and analytics across industries. Because each system has a bounded scope, ANI is also easier to test, monitor, and control than the more general systems described below would be.

Applications & examples

● Search and recommendation: e‑commerce and streaming recommendations.

● Computer vision: face unlock, quality inspection in factories, medical imaging triage.

● NLP utilities: spam filters, sentiment analysis, document classification.

● Autonomous systems: self‑driving modules, warehouse robots (still narrow, domain‑specific).

2. Artificial General Intelligence (AGI)

What it is

AGI describes a hypothetical AI system with human‑level understanding and problem‑solving across a wide variety of tasks and domains. It would be able to transfer learning flexibly and reason about novel situations.

Key characteristics

● Broad competence: capable of learning and performing any intellectual task that a human can.

● Transfer and abstraction: can generalize knowledge across domains without extensive retraining.

● Autonomy: can set sub‑goals, plan, and adapt dynamically, not just follow fixed patterns.

How it might work (current thinking)

● Integrating diverse capabilities—language, vision, action, memory—within unified architectures.

● Using advanced learning paradigms that more closely resemble human learning, such as cumulative, contextual, and experiential learning.

● Scaling data, compute, and algorithmic efficiency while improving alignment and control techniques.

Why it matters

AGI is seen as a potential inflection point for the economy and society, enabling unprecedented levels of automation and scientific discovery. It is also central to debates on AI safety, alignment, and governance.

Applications & examples (hypothetical)

● General‑purpose digital workers that can switch between roles (analyst, coder, designer) with minimal guidance.

● Adaptive physical robots that can learn new tasks on the fly in homes, factories, and public spaces.

● Automated scientific research systems generating and testing hypotheses across disciplines.

3. Artificial Superintelligence (ASI)

What it is

ASI describes an even more speculative level where AI systems surpass the best human minds in virtually every field, including creativity, strategic planning, and social intelligence. It implies sustained self‑improvement and capabilities beyond human comprehension in many areas.

Key characteristics

● Superhuman performance: outperforms humans across most cognitive dimensions—speed, accuracy, memory, creativity.

● Recursive self‑improvement: can improve its own architecture and learning efficiency, potentially leading to an “intelligence explosion.”

● Opaque decision‑making: behavior and internal reasoning may become difficult or impossible for humans to fully interpret.

How it might work (speculative)

● Building on AGI with architectures that support large‑scale self‑optimization and autonomous research.

● Using synthetic data generation, simulated environments, and continual self‑play to expand capabilities beyond human training data.

Why it matters

ASI sits at the center of long‑term AI risk discussions, from existential safety concerns to governance of highly capable systems. It also underpins optimistic visions of breakthroughs in healthcare, climate, and space exploration.

Potential applications (speculative)

● Highly personalized medicine with real‑time treatment design and molecular‑level reasoning.

● Advanced climate engineering, energy optimization, and large‑scale infrastructure planning.

● Space exploration, autonomous colony design, and long‑horizon scientific programs.

4. Machine Learning & Deep Learning

What they are

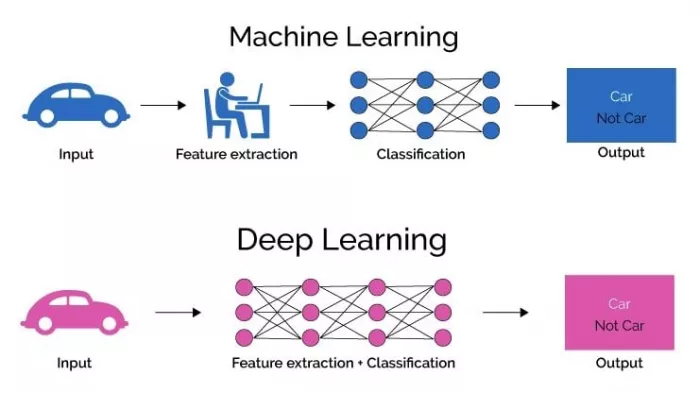

Machine Learning is a broad approach where systems learn to make predictions or decisions from data rather than hard‑coded rules. Deep Learning is a subfield using multi‑layer neural networks to model complex, high‑dimensional patterns.

Key characteristics

● Data‑driven learning: models adapt their parameters based on examples and feedback.

● Probabilistic outputs: predictions are typically expressed as probabilities, not certainties.

● Performance scales with data and compute: more data and larger models often yield better accuracy up to a point.
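
As an illustration of the "probabilistic outputs" point, classifiers commonly turn raw scores into probabilities with the softmax function. The sketch below assumes three made‑up class labels and scores; only `softmax` itself is standard.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Convert raw model scores (logits) into probabilities that sum to 1."""
    shifted = [s - max(scores) for s in scores]  # subtract the max for numerical stability
    exps = [math.exp(s) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]

labels = ["cat", "dog", "rabbit"]
logits = [2.0, 1.0, 0.1]  # hypothetical raw outputs of a classifier
probabilities = softmax(logits)
prediction = labels[probabilities.index(max(probabilities))]
```

Here the model would favor "cat" with a probability of roughly 0.66, which leaves about a one‑in‑three chance that another label is correct. Downstream systems can use that number to decide when to defer to a human.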

Why it matters

ML and DL are the underlying engines behind generative AI, modern vision, speech recognition, and recommendation systems. Understanding them helps you distinguish “AI as marketing” from actual technical capability.

High‑level mechanics

● Supervised learning – learn a mapping from labeled examples (e.g., images with class labels).

● Unsupervised learning – discover structure in unlabeled data (clustering, embeddings).

● Reinforcement learning – learn a policy by trial and error to maximize rewards in an environment.

● Deep neural networks – stacks of layers that transform inputs into representations, trained by backpropagation.
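
The last bullet mentions training by backpropagation. As a rough illustration, the sketch below applies the chain rule by hand to a two‑layer network with one unit per layer and then takes gradient descent steps on a single example. The starting weights, the training example, and the learning rate are arbitrary assumptions; real frameworks compute these gradients automatically.

```python
import math

def forward(params, x):
    """Two-layer network: hidden = tanh(w1*x + b1), output = w2*hidden + b2."""
    hidden = math.tanh(params["w1"] * x + params["b1"])
    output = params["w2"] * hidden + params["b2"]
    return hidden, output

def loss_fn(params, x, target):
    """Squared error between the network output and the target."""
    _, output = forward(params, x)
    return 0.5 * (output - target) ** 2

def backward(params, x, target):
    """Backpropagation: apply the chain rule from the loss back to each parameter."""
    hidden, output = forward(params, x)
    d_output = output - target                   # dLoss/dOutput
    d_hidden = d_output * params["w2"]           # back through the output layer
    d_pre = d_hidden * (1.0 - hidden ** 2)       # back through tanh
    return {"w2": d_output * hidden, "b2": d_output,
            "w1": d_pre * x, "b1": d_pre}

params = {"w1": 0.5, "b1": 0.0, "w2": -0.3, "b2": 0.1}  # arbitrary starting values
x, target, learning_rate = 1.0, 0.5, 0.1

initial_loss = loss_fn(params, x, target)
for _ in range(200):
    grads = backward(params, x, target)
    for name in params:
        params[name] -= learning_rate * grads[name]      # gradient descent step
final_loss = loss_fn(params, x, target)
```

After the loop, `final_loss` is far smaller than `initial_loss`. Deep networks repeat the same backward pass across many layers and many examples.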

Applications & examples

● Credit scoring, churn prediction, demand forecasting.

● Image classification, object detection, OCR.

● Speech‑to‑text, voice assistants, keyword spotting.

● Core components inside generative models like large language models (LLMs) and diffusion models.

5. Generative AI

What it is

Generative AI refers to models that can create new content—text, images, audio, video, or code—by learning patterns from large datasets and sampling from those learned distributions. It is powered by large “foundation models” like LLMs and multimodal architectures.

Key characteristics

● Content synthesis: can generate coherent text, realistic images, synthetic voices, or videos from prompts.

● Multimodal potential: many newer models handle multiple input/output types (text, image, audio) within one model.

● Few‑shot adaptability: can be adapted to niche tasks with relatively little task‑specific data.

How it works (conceptual)

● Pretraining: a large neural network (often a transformer) is trained on massive corpora to predict the next token (for text) or, in diffusion models, to remove noise from images or their latent representations.

● Fine‑tuning & alignment: additional training on curated data, often combined with reinforcement learning from human feedback (RLHF), to shape behavior.

● Inference: at runtime, the model samples outputs token‑by‑token or step‑by‑step, guided by probabilities learned during training.
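
The pretraining and inference steps can be illustrated with a deliberately tiny stand‑in: a bigram model that counts which token follows which, then samples one token at a time. This is an assumed toy, not how an LLM is built (there is no neural network or fine‑tuning here), and the corpus and function names are made up for the example.

```python
import random
from collections import Counter, defaultdict

corpus = "the cat sat on the mat . the dog sat on the rug ."  # tiny stand-in corpus
tokens = corpus.split()

# "Pretraining": count how often each token follows each other token.
next_counts: dict[str, Counter] = defaultdict(Counter)
for current, following in zip(tokens, tokens[1:]):
    next_counts[current][following] += 1

def next_token_probs(token: str) -> dict[str, float]:
    """Turn follow-up counts into a probability distribution over next tokens."""
    counts = next_counts[token]
    total = sum(counts.values())
    return {candidate: n / total for candidate, n in counts.items()}

def generate(start: str, length: int, rng: random.Random) -> list[str]:
    """Inference: sample one token at a time from the learned distribution."""
    output = [start]
    for _ in range(length):
        probs = next_token_probs(output[-1])
        if not probs:  # no observed continuation for this token
            break
        candidates, weights = zip(*probs.items())
        output.append(rng.choices(candidates, weights=weights)[0])
    return output

sample = generate("the", 5, random.Random(0))
```

In this corpus "the" is followed equally often by "cat", "mat", "dog", and "rug", so each gets probability 0.25, while "sat" is always followed by "on". Large models replace the count table with a neural network conditioned on much longer context, but the sample‑one‑token‑at‑a‑time loop is the same idea.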

Why it matters

Generative AI is transforming content creation, software development, design, and knowledge work by reducing draft and ideation time. It also raises critical questions around copyright, bias, hallucination, and synthetic data governance.

Applications & examples

● Text: chatbots, copywriting, summarization, coding assistants like GitHub Copilot.

● Images & design: AI art, product mockups, advertising creatives, floor‑plan generation in architecture.

● Audio & video: voice cloning, dubbing, music generation, synthetic actors.

● Science & industry: drug discovery, materials design, synthetic data generation for model training.

Other important AI categories

You will often see other labels that cut across the types above and help readers frame current systems.

● Reactive vs limited‑memory vs theory‑of‑mind vs self‑aware AI: a conceptual ladder describing increasing sophistication in memory and awareness, used in some academic and educational materials.

● Symbolic vs sub‑symbolic AI: rule‑based logic systems versus statistical/neural models; many modern systems are hybrid.

● Agentic AI: systems that use models plus tools, memory, and planning loops to take multi‑step actions (e.g., software agents).
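
The agentic pattern in the last bullet (model plus tools, memory, and a planning loop) can be sketched as follows. Everything here is hypothetical: `fake_model` is a hard‑coded stand‑in for a call to a language model, and `TOOLS`, the action format, and the step budget are assumptions chosen for illustration.

```python
# Toy agent loop: a stand-in "model" picks a tool, the loop runs it and stores the result.
# fake_model, TOOLS, and the action format are hypothetical; a real agent would call an LLM.

def add(a: float, b: float) -> float:
    return a + b

def multiply(a: float, b: float) -> float:
    return a * b

TOOLS = {"add": add, "multiply": multiply}

def fake_model(goal: str, memory: list[dict]) -> dict:
    """Stand-in planner: decide the next action from the goal and what is already known."""
    if not memory:
        return {"tool": "add", "args": (2, 3)}                         # step 1: compute 2 + 3
    if len(memory) == 1:
        return {"tool": "multiply", "args": (memory[0]["result"], 4)}  # step 2: times 4
    return {"tool": None, "answer": memory[-1]["result"]}              # done

def run_agent(goal: str, max_steps: int = 5):
    memory: list[dict] = []
    for _ in range(max_steps):                  # planning loop with a step budget
        action = fake_model(goal, memory)
        if action["tool"] is None:
            return action["answer"], memory
        result = TOOLS[action["tool"]](*action["args"])             # tool call
        memory.append({"tool": action["tool"], "result": result})   # remember the outcome
    return None, memory                         # budget exhausted without an answer

answer, trace = run_agent("compute (2 + 3) * 4")
```

The step budget matters in practice: without it, a planner that never declares itself done would loop indefinitely.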

Quick comparison table

| Type | Capability level | Characteristics | Status today | Typical / example uses |
| --- | --- | --- | --- | --- |
| ANI | Narrow, task‑specific | High accuracy in one domain, poor transfer, data‑driven | Dominant form of real‑world AI | Recommendations, fraud detection, vision, speech utilities |
| ML / DL | Underlying techniques | Learn patterns from data; deep nets for complex signals | Mature, widely deployed | Classification, regression, detection, embeddings |
| Generative AI | Content‑creating ANI | Synthesizes text, image, audio, code from prompts | Rapidly growing, strongly commercialized | Chatbots, code assistants, design tools, synthetic data |
| AGI | Human‑level generality | Broad reasoning, transfer learning, autonomy | Not yet achieved; active research and debate | Hypothetical: digital workers, adaptive robots, general research |
| ASI | Beyond human | Superhuman across domains, self‑improving | Purely speculative; raises major safety questions | Hypothetical: advanced science, space, macro‑governance |

Final Conclusion

AI today spans a spectrum from narrow, task‑specific systems to speculative superintelligence, and each type plays a distinct role in how technology shapes our lives and work. Narrow and generative AI, powered by modern machine learning and deep learning, already deliver tangible value in automation, creativity, and decision‑making, while AGI and ASI remain powerful but uncertain horizons that drive ongoing debates about safety, governance, and long‑term impact.

Understanding these categories—how they work, where they excel, and where their limits and risks lie—helps you cut through hype, choose the right tools for real‑world problems, and ask sharper questions about ethics and regulation. As AI capabilities continue to evolve, the most important skill for individuals, businesses, and policymakers will be learning to work with these systems responsibly, leveraging today’s narrow and generative models while preparing for more general and powerful forms that may emerge in the future.
