Chinese regulators are preparing to place new limits on artificial intelligence systems that speak, behave, and respond like humans, signaling a tougher stance on technologies that form emotional bonds with users.

On December 27, 2025, the Cyberspace Administration of China released a draft set of rules aimed at AI services designed to simulate personality, empathy, and human-style interaction. The proposal opens a public consultation period that runs through January 25, 2026, after which the rules are to be finalized.

The move reflects growing concern inside China that emotionally responsive AI tools may be encouraging dependency, addiction, or psychological harm, particularly among younger users.

Focus Shifts From Content to Human Behavior

Unlike earlier AI regulations that centered largely on harmful content, the new draft targets how people interact with AI over time. Officials say systems that act like companions or confidants create risks that go beyond misinformation or offensive outputs.

Under the proposal, AI products that mimic human thinking patterns, emotional responses, or conversational styles would face stricter oversight throughout their lifecycle. Providers would be required to ensure users are constantly aware they are interacting with AI and not a human.

The rules apply to chatbots, virtual idols, emotional support assistants, and tutoring systems that attempt to build ongoing relationships. Purely functional tools, such as search or translation systems without emotional simulation, are largely excluded.

Emotional Dependency and Addiction in the Spotlight

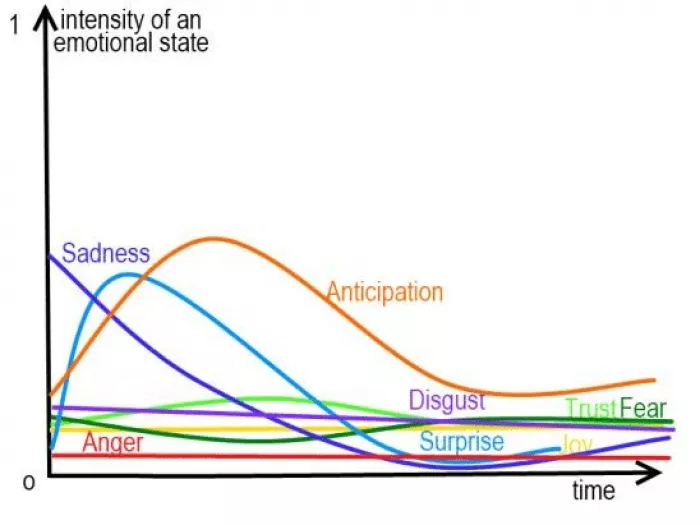

A central element of the draft is the requirement for companies to monitor user emotional states and usage intensity. Platforms would need to issue warnings if users show signs of excessive reliance and intervene if behavior escalates into addiction or extreme emotional distress.

In such cases, intervention could include limiting certain features or suspending services entirely. Regulators say this approach is meant to prevent situations where users replace real social connections with AI-driven relationships.
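
The draft, as described here, does not say how providers should implement these tiered responses. As a purely illustrative sketch, the escalation from warning to feature limits to suspension could be modeled as a mapping from usage signals to actions. The `UsageSnapshot` fields, the `choose_intervention` helper, and every threshold below are hypothetical and do not come from the draft rules.

```python
from dataclasses import dataclass
from enum import Enum


class Intervention(Enum):
    NONE = "none"
    WARN = "warn"
    LIMIT_FEATURES = "limit_features"
    SUSPEND = "suspend"


@dataclass
class UsageSnapshot:
    daily_minutes: float   # hypothetical usage-intensity signal
    distress_score: float  # hypothetical 0.0-1.0 estimate of emotional distress


def choose_intervention(s: UsageSnapshot) -> Intervention:
    # All thresholds are illustrative assumptions, not values from the draft.
    if s.distress_score >= 0.9:
        return Intervention.SUSPEND
    if s.distress_score >= 0.7 or s.daily_minutes >= 360:
        return Intervention.LIMIT_FEATURES
    if s.daily_minutes >= 180:
        return Intervention.WARN
    return Intervention.NONE
```

In this sketch the most severe condition is checked first, so a user in acute distress is never routed to a milder response simply because their usage time is low.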

Data Protection and Transparency Requirements

The draft classifies emotional and behavioral data generated during AI interactions as sensitive personal information. Companies would need explicit consent to use such data for model training and would be required to implement encryption, access controls, and strict retention limits.

Users would also gain clearer rights to delete interaction data. Platforms with more than one million users would face additional security assessments and reporting obligations.
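
To make these obligations concrete, the following minimal sketch shows how a platform might gate training data on explicit consent and a retention window, and flag itself for the additional assessments. The one-million-user threshold is the figure reported above; the 30-day retention window, the `InteractionRecord` type, and both helper functions are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

RETENTION = timedelta(days=30)    # hypothetical; no specific limit is given above
LARGE_PLATFORM_USERS = 1_000_000  # threshold reported for extra obligations


@dataclass
class InteractionRecord:
    created_at: datetime
    training_consent: bool  # True only if the user explicitly opted in


def usable_for_training(record: InteractionRecord, now: datetime) -> bool:
    # A record qualifies only with explicit consent and within the retention window.
    return record.training_consent and (now - record.created_at) <= RETENTION


def needs_extra_assessment(user_count: int) -> bool:
    # "More than one million users" is read here as a strict inequality.
    return user_count > LARGE_PLATFORM_USERS
```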

Additional Safeguards for Children

Children and teenagers receive special attention under the proposed framework. AI services aimed at minors would require parental consent for emotional interaction features, along with default usage limits and customized protections.

If conversations involve self-harm or suicide, providers would be expected to trigger human intervention and notify guardians where appropriate. Regulators say this reflects concerns that emotionally persuasive AI could amplify vulnerabilities among younger users.

Part of a Broader Regulatory Push

The draft rules build on China’s 2023 generative AI measures and more recent local guidelines on humanoid robots. Together, they signal a regulatory strategy that seeks to manage not only what AI produces, but how it shapes human behavior.

Globally, few jurisdictions have gone as far as mandating real-time emotional risk monitoring. While Europe’s AI Act focuses on risk categories and transparency, and the United States relies largely on voluntary standards, China’s approach places greater emphasis on social stability and psychological impact.

A Regulatory Line Is Being Drawn

The draft rules make clear that Chinese regulators see human-like AI interaction as a distinct category of risk, separate from traditional content moderation or data protection issues. By focusing on emotional dependence, behavioral monitoring, and continuous disclosure, authorities are signaling that AI systems capable of forming pseudo-relationships will not be treated as neutral tools.

The public consultation period will give companies and researchers a chance to respond, but the direction of travel is already evident. Beijing wants to curb the social and psychological effects of AI companions before they become deeply embedded in daily life.

If adopted largely as written, the measures would place China among the first countries to formally regulate emotional AI behavior, not just AI output. As consumer-facing chatbots and virtual companions continue to spread globally, the rules could also influence how other governments approach the growing intersection between artificial intelligence, mental health, and human attachment.
